DSCI 311

Optimization and Mathematical Foundations for Data Science

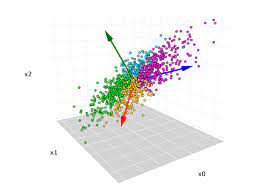

This course covers the most fundamental tools from calculus, linear algebra, probability, statistics, and optimization used in data science. These tools are introduced in the context of unsupervised and supervised learning problems such as principal component analysis, maximum likelihood estimation, linear and logistic regression, support vector machines, and neural network training. The specific tools covered from probability and statistics include random variables, expectation, higher-order moments, conditional probability, and Bayes’ rule. Specific topics in optimization include convexity, (non)smoothness, the basics of convex analysis and optimization, optimality conditions, gradient descent, convergence (rates), and the stochastic gradient method.